Introduction

Ever wondered how to turn a simple natural language question into a valid SQL query and get an answer from a live database? During my internship, I worked on a project using Mastra framework and the Peaka MCP server to build a conversational agent that understands user questions, writes SQL, and retrieves answers from Peaka’s sample data. In this blog, I’ll walk you through what I built, and how it works.What is an MCP Server?

While Mastra lets you build agents, those agents often need external tools like querying a database, fetching files, or calling APIs. That’s where the MCP server comes in. MCP stands for Model Context Protocol. An MCP server is a standardized interface that exposes tools or data sources to agents. The agent doesn’t need to know how a database works or where an API is hosted. For example, in my project, the Peaka MCP server exposed the following tools:peaka_schema_retriever: Retrieves metadata and schema infopeaka_query_golden_sqls: Queries question/SQL pairs from Peaka’s storepeaka_execute_sql_query: Executes SQL queries on Peaka

Prerequisites

To build and run this project, you will need:- Node.js installed

- LLM provider or API key: To power the agents, you need an LLM provider API Key. For example, get one from OpenAI.

- Peaka Project API Key: To use Peaka tools via the MCP server, you need to create a Project API Key from your Peaka workspace. You can follow the steps in the Peaka documentation to generate it.

- Packages: Install the following packages:

Tech Stack

| Technology | Description |

|---|---|

| Peaka | A zero-ETL data integration platform with single-step context generation capability |

| OpenAI | An artificial intelligence research lab focused on developing advanced AI technologies. |

| Mastra | A modular framework for building AI agents with composable tools, natural language interfaces, and multi-channel deployment support. |

| Vercel | A cloud platform for frontend frameworks and serverless functions, enabling fast and scalable web app deployments. |

| Next.js | The React Framework for the Web. Next.js was used for building the chatbot app. |

| assistant-ui | An open-source chat interface designed to connect easily with Mastra agents. |

Why Peaka?

Peaka is a no-code data platform that allows you to:- Connect to APIs, databases, and SaaS tools without integration code,

- Model external data as virtual tables without moving or duplicating it,

- Query data using SQL and expose it through ready-to-use API endpoints.

- Providing sample datasets that could be queried instantly,

- Understanding the data schema to support structured, SQL-based querying,

- Returning reliable results through a single API call, without building a custom backend.

Why Mastra?

Mastra is a developer-friendly, open-source framework for building AI applications, especially ones that rely on large language models (LLMs). It’s written in TypeScript and provides everything you need to build intelligent agents.TL;DR

If you want to skip the implementation details, check out the finished code on GitHub. Follow the instructions in the README to run the project locally. You can also try the live demo.How to Use Peaka with Mastra via its MCP Server Tools

Building the Peaka Agent

First, set the environment for Peaka API and OpenAI API keys in a.env file:

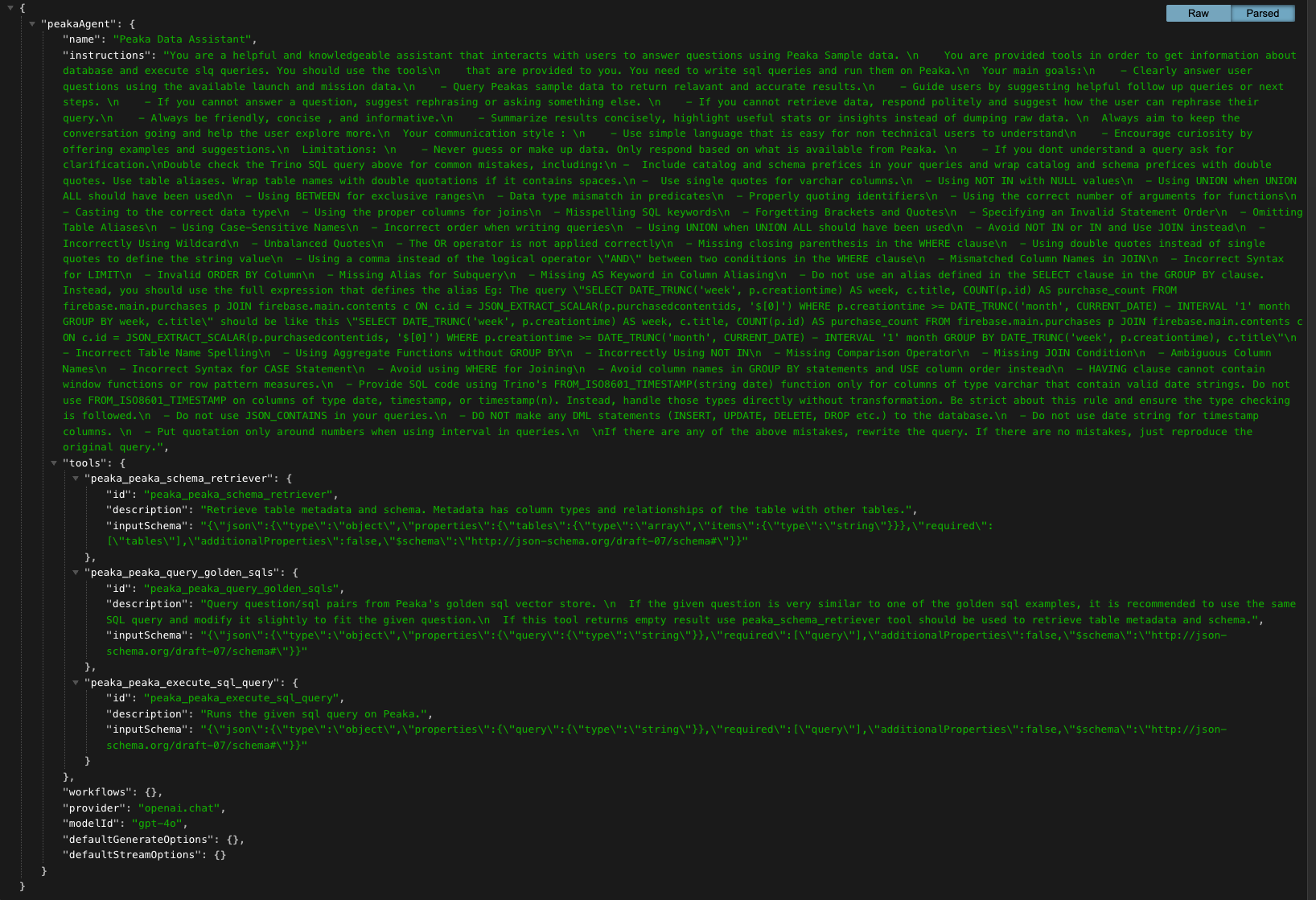

peakaAgent. It uses OpenAI’s gpt-4o-mini model, connects to the tools exposed by the Peaka MCP server, and stores memory in a local SQLite database.

Here’s how the agent is instantiated:

Import required packages in agent.ts file:

Why Customize the Instructions?

By default, Mastra agents work well with basic prompting. But I found that explicit instructions help guide the LLM more reliably and make a big difference in consistency. To improve the assistants’s performance, I manually tested different user questions and tweaked instructions to fix edge cases.Injecting SQL Rules Resources from the MCP Server

Peaka’s MCP server doesn’t just expose tools like SQL execution and data retrieval. It also provides resources, including a rule set that guidelines how to write valid SQL using Trino, which is the SQL engine Peaka relies on. To make the assistant more accurate, I pulled in these rules dynamically and added them to the agent’s instructions. By including the following function in mcp.ts file:Running the Chatbot

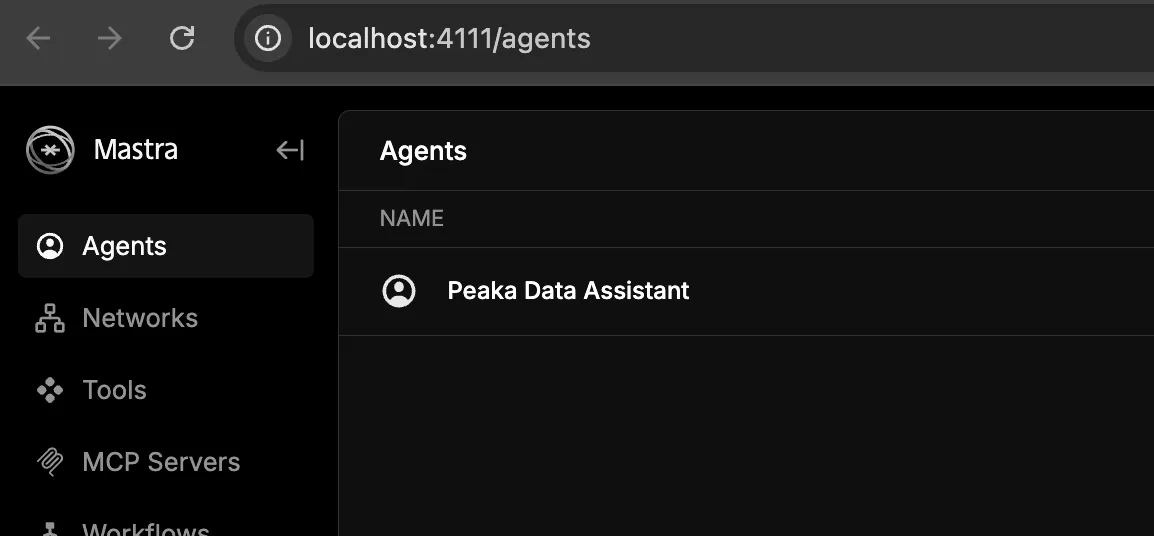

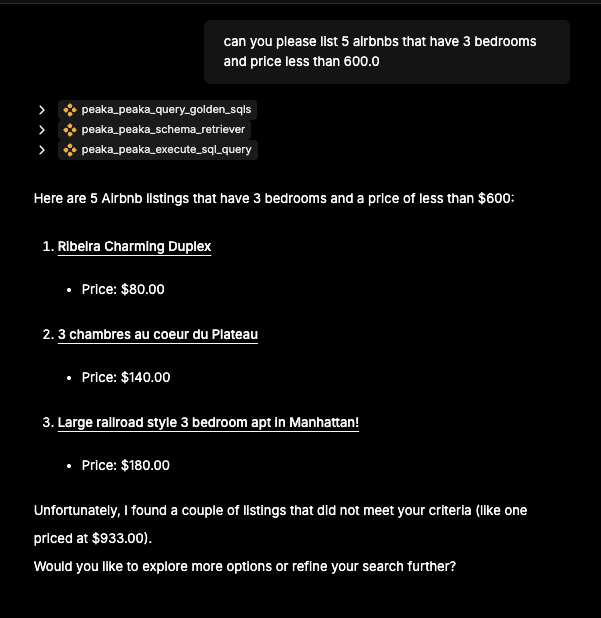

Open that link in your browser to access the Mastra Playground, where you can click on “Peaka Data Assistant” and interact with your agent by testing queries from Peaka sample data. In the following example, I asked the assistant to list 5 Airbnb listings with exactly 3 bedrooms and a price under $600.

The assistant parsed the natural language request, generated the appropriate SQL query, and returned relevant results, filtering out any listings that didn’t match. This shows how easily users can interact with the system using plain language while still getting precise, data-driven results.

In the following example, I asked the assistant to list 5 Airbnb listings with exactly 3 bedrooms and a price under $600.

The assistant parsed the natural language request, generated the appropriate SQL query, and returned relevant results, filtering out any listings that didn’t match. This shows how easily users can interact with the system using plain language while still getting precise, data-driven results.

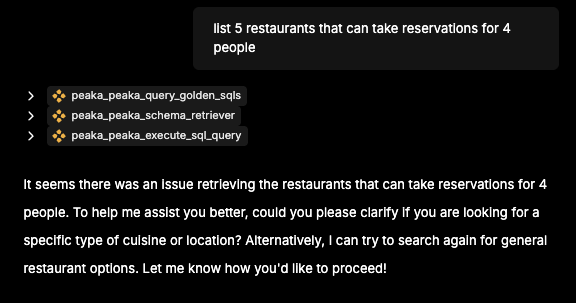

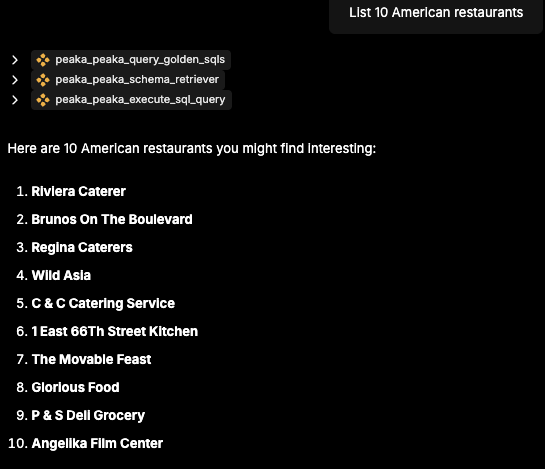

When exact matches aren’t found, the assistant prompts the user for clarification, helping refine the query for more accurate results.

When exact matches aren’t found, the assistant prompts the user for clarification, helping refine the query for more accurate results.

Deploying to Vercel

Vercel is a cloud platform that makes it easy to deploy frontend and backend applications. For this project, we can use Vercel to host and serve our Mastra agent API, making it accessible online with just a few configuration steps.Step 1: Add the Vercel Deployer to your Mastra Project:

in index.ts, import the Vercel Deployer which handles the API routing and deployement structure Vercel expects:Step 2: Patch The MCP Client for Vercel

In your mcp.ts file, before creating the MPCLient, add the following lines: This validated that the Vercel deployment was not only live but also correctly integrated with the Peaka toolset.

This validated that the Vercel deployment was not only live but also correctly integrated with the Peaka toolset.

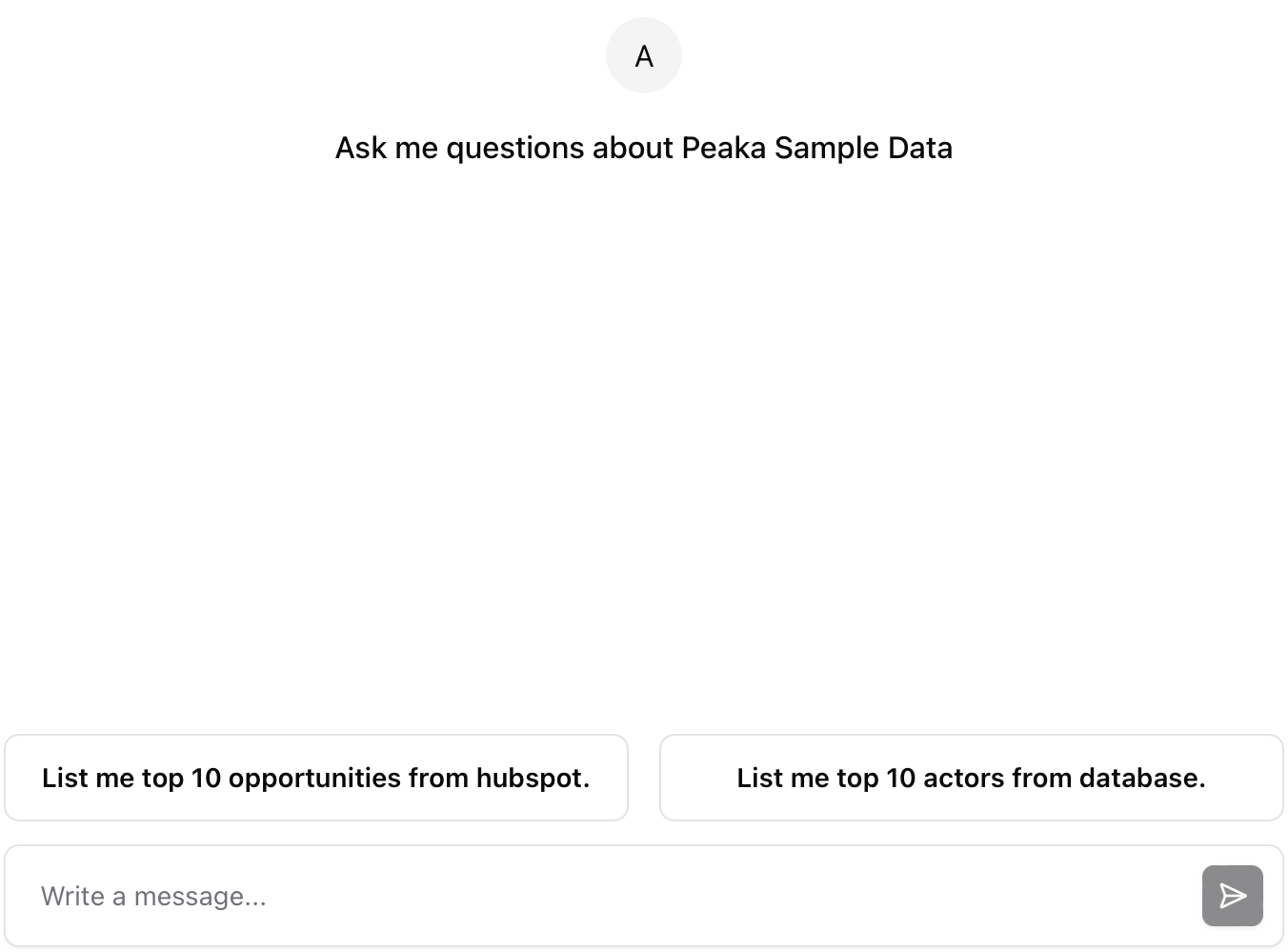

Step 3: Building the Chat Interface with Assistant-UI

To provide a user friendly interface for interacting with my Mastra agent, I used assistant-ui , which is an open source frontend built specifically for Mastra deployments. I connected it to my Vercel-hosted Mastra Backend, allowing real time responses from deployed agent. This setup made it simple to test and showcase the Peaka agent’s capabilities without building a custom frontend from scratch since Vercel doesn’t offer it. You can access The Peaka assistant-UI finished code on Github. Follow the Readme instructions to run the project.

Key Takeaways

- Peaka simplifies data access: Query real data without setting up ETL, modeling it as virtual tables to the agents access and let it do its work. Peaka MCP server is backed by tools that enables agents to query structured data using natural language.

- Mastra provides a smart interface: With Mastra powering the logic and assistant-ui providing the frontend, you can build and deploy conversational agents in minutes.

- Vercel makes it production-ready: Deploy your agent as a server-less API with zero config and instantly share it online.