Prerequisites

Before adding a custom LLM, make sure you have the following ready:

- API Key: The authentication key issued by your LLM provider.

- Base URL (if applicable): The endpoint through which requests to the model will be made.

Supported providers:

- OpenAI and Azure OpenAI

- Google Gemini

- Alibaba Cloud Qwen

Model Roles

In Peaka, each model you add is assigned a role based on how it will be used. Currently, we support two roles:

| Role | Purpose | Example Usage |
|---|---|---|
| Agent / Chat | Text-to-SQL generation | "Show me the top 10 customers by revenue" → the model generates the SQL query. |
| Embedding / RAG | Retrieval-Augmented Generation (RAG) | Embeddings of table names and metadata are stored → the system retrieves relevant context before generating SQL. |

How they work together

- Agent / Chat models convert natural-language questions into SQL queries.

- Embedding / RAG models enhance accuracy by retrieving the most relevant tables and metadata before query generation.
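To make the division of labor concrete, here is a minimal, self-contained Python sketch of the retrieval step. It is not Peaka's implementation: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and the table names, descriptions, and prompt format are hypothetical. It ranks table metadata by cosine similarity to the user's question and assembles the best matches into the context an Agent / Chat model would receive.

```python
import math
import re
from collections import Counter

# Hypothetical table metadata; in practice this would come from your catalog.
TABLES = {
    "customers": "customer id name email signup date",
    "orders": "order id customer id revenue amount order date",
    "web_logs": "page url user agent timestamp",
}


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 2) -> list[str]:
    """Return the k table names whose metadata is most similar to the question."""
    q = embed(question)
    return sorted(
        TABLES,
        key=lambda name: cosine(q, embed(name + " " + TABLES[name])),
        reverse=True,
    )[:k]


def build_prompt(question: str) -> str:
    """Assemble the retrieved context that would be handed to an Agent / Chat model."""
    context = "\n".join(f"- {t}: {TABLES[t]}" for t in retrieve(question))
    return f"Relevant tables:\n{context}\n\nWrite a SQL query for: {question}"


if __name__ == "__main__":
    print(build_prompt("Show me the top 10 customers by revenue"))
```

With a real embedding model the vectors are dense and capture meaning rather than shared words, but the flow (rank metadata, then pass the top matches to the chat model) is the same idea.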

Adding a Custom Model

Follow these steps to add a custom model to Peaka:

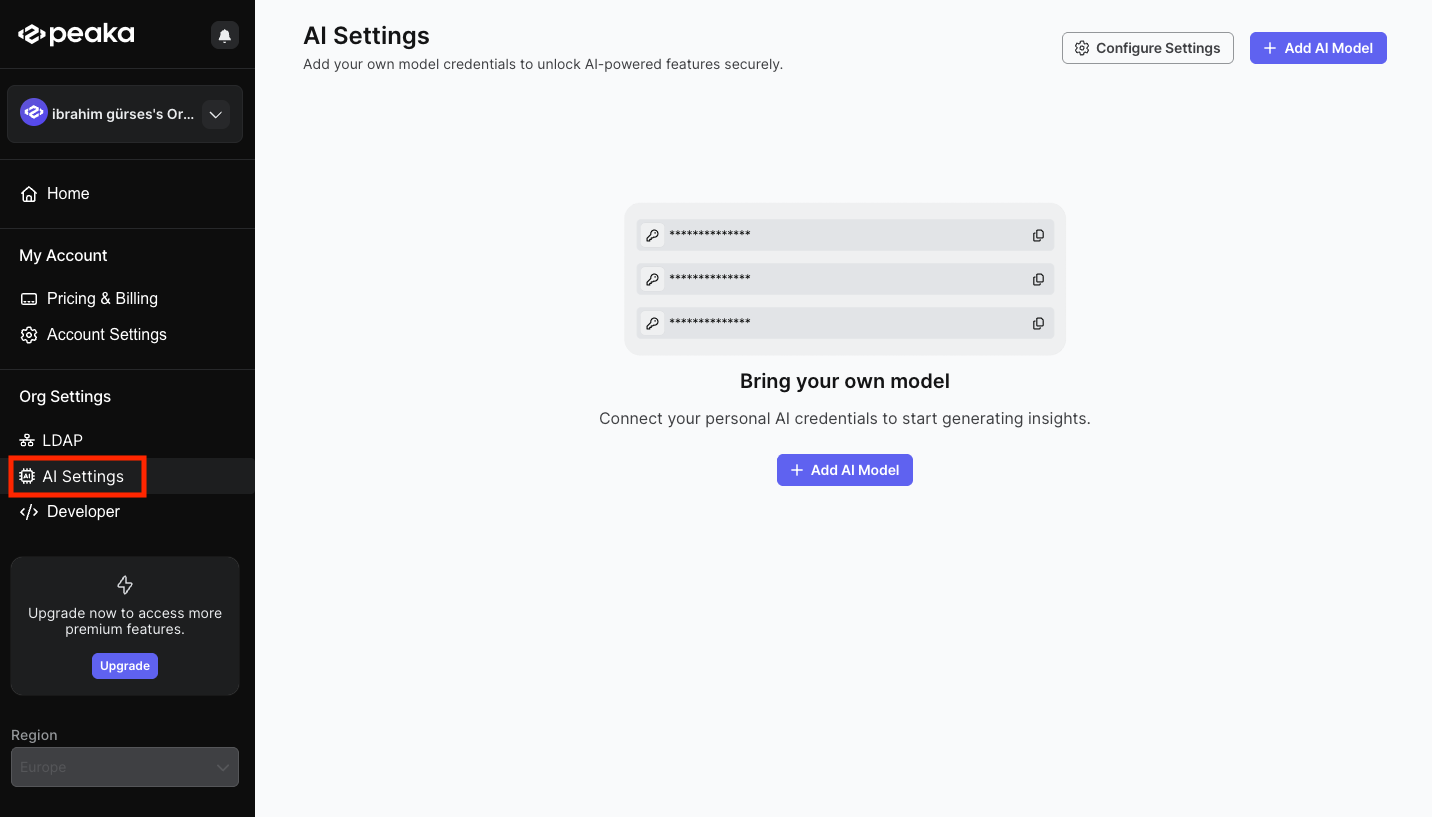

Navigate to AI Settings

- Go to Organization Settings → AI Settings.


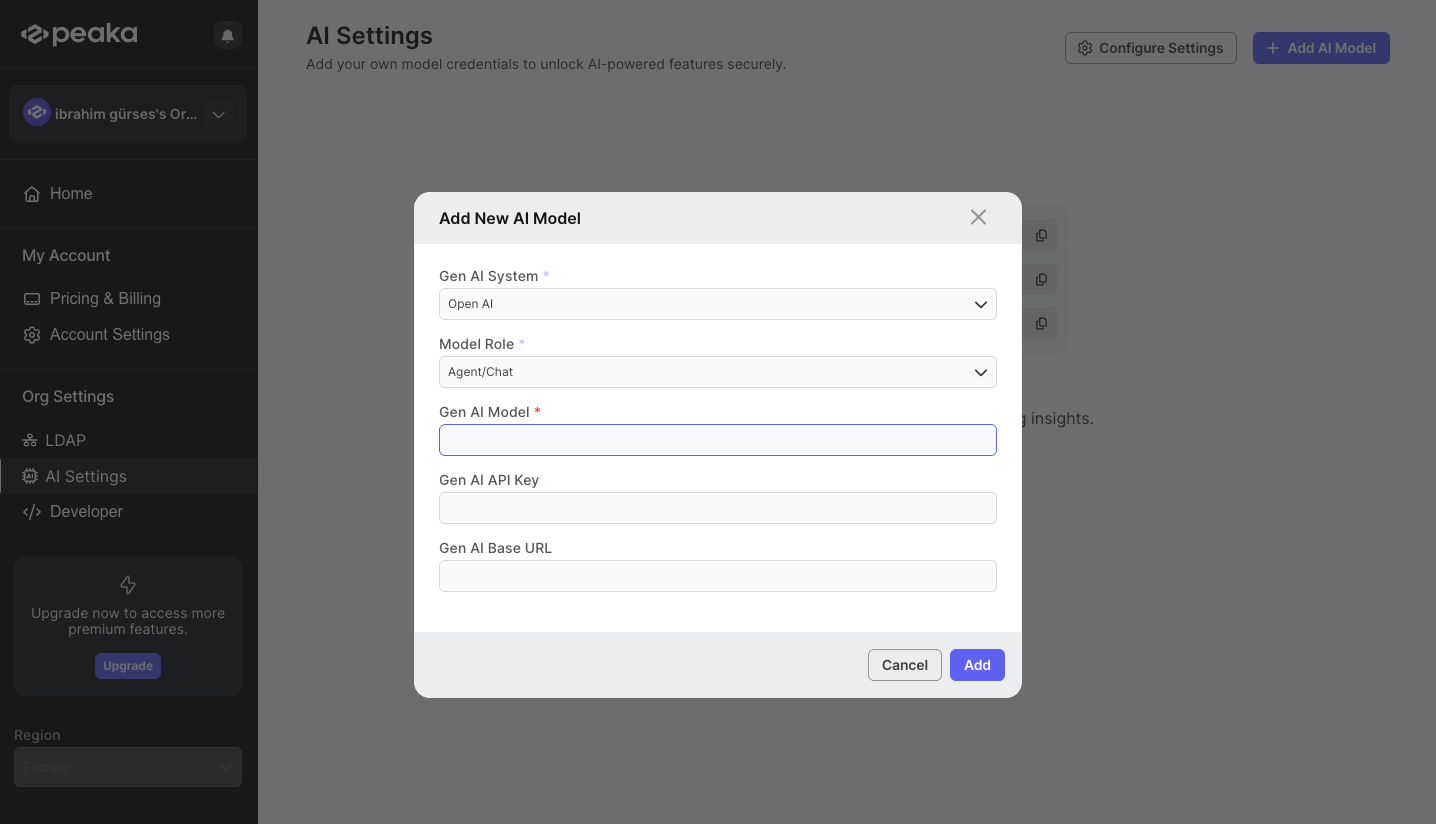

Click “Add AI Model”

- Locate and click the Add AI Model button to open the form.


Fill out the Add AI Model Form

The form contains the following fields:

- Gen AI System – Select the provider (e.g., Google Gemini, OpenAI, Alibaba Cloud Qwen).

- Model Role – Choose the role of the model: Agent/Chat or Embedding/RAG.

- Gen AI Model – Specify the model name/version to use.

- Gen AI API Key – Enter the API key issued by your provider.

- Gen AI Base URL – Enter the base URL/endpoint if required by the provider.

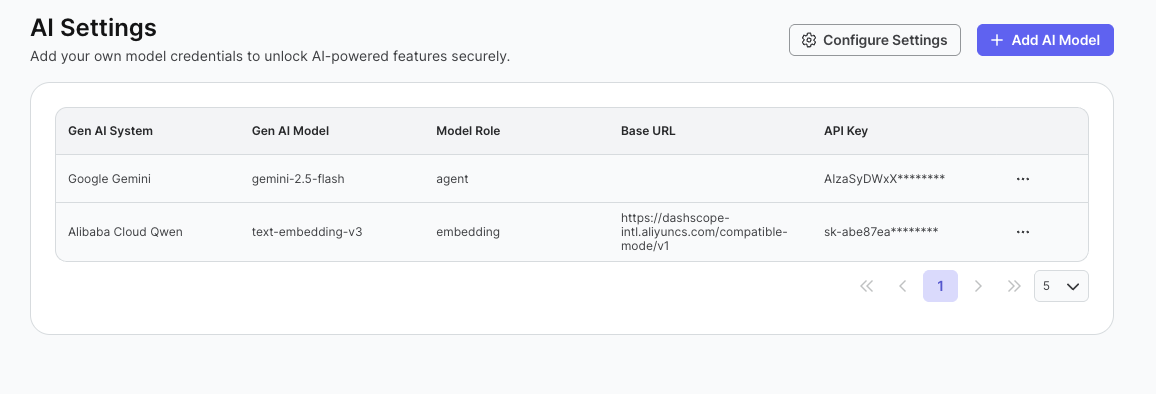

Save and Validate

- Click Save.

- Peaka will automatically test your credentials.

- If the credentials are valid, the model will be added and ready to use.

- If invalid, an error message will guide you to correct the information.
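Peaka runs the credential test for you when you click Save. If you would like to confirm an OpenAI key on your own first, one option is to call OpenAI's model-listing endpoint (`GET /models` under the base URL `https://api.openai.com/v1`) with the key sent as a Bearer token. A `200` response means the key was accepted. The sketch below uses only the Python standard library; it is an assumed manual check, not a description of how Peaka validates credentials, and other providers (Azure OpenAI, Google Gemini, Alibaba Cloud Qwen) use different endpoints or authentication headers.

```python
import urllib.error
import urllib.request


def build_models_request(api_key: str, base_url: str = "https://api.openai.com/v1") -> urllib.request.Request:
    """Build a GET request to an OpenAI-style /models endpoint with Bearer auth."""
    url = base_url.rstrip("/") + "/models"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"}, method="GET")


def key_is_valid(api_key: str, base_url: str = "https://api.openai.com/v1", timeout: float = 10.0) -> bool:
    """Return True if the endpoint accepts the key (HTTP 200), False on 401/403."""
    req = build_models_request(api_key, base_url)
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        if err.code in (401, 403):
            return False
        # Other errors (rate limits, outages) say nothing about the key itself.
        raise
```

For example, `key_is_valid(os.environ["OPENAI_API_KEY"])` sends one request; the environment variable name is only a common convention, not something Peaka requires.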

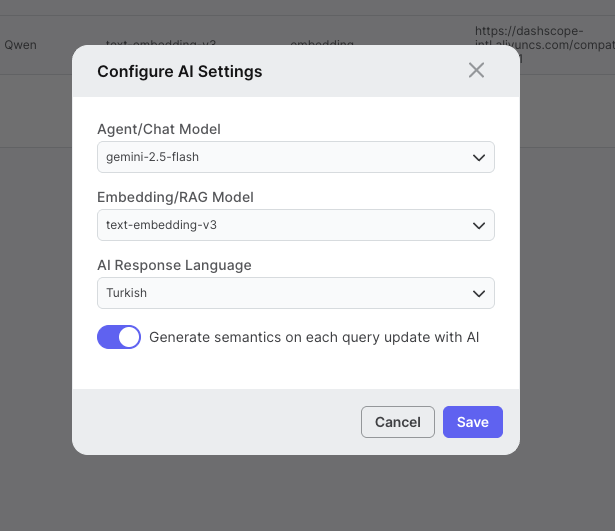

Configuring Model Usage

Once you’ve added your custom AI models, you can configure Peaka to use them instead of the default models.

Configure the Settings Form

The form contains the following fields:

| Setting | Description |
|---|---|
| Agent/Chat Model | Select the model to use for chat and text-to-SQL agent features. |
| Embedding / RAG Model | Select the model to use for retrieval-augmented generation and metadata retrieval. ⚠️ Changing this model will trigger reindexing of semantic metadata, which may take some time. |
| AI Response Language | Set the global language for AI responses across the system. |
| Generate Semantics on Each Query Update with AI | Toggle On/Off. When enabled, Peaka will automatically generate semantic metadata whenever a new query is executed. |

Save Settings

- Click Save to apply your configuration.

- Peaka will start using your selected AI models according to the roles you’ve assigned.
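The reindexing warning on the Embedding / RAG Model setting follows from how embeddings work: vectors produced by different embedding models live in different vector spaces (often with different dimensions), so metadata embedded with the old model cannot be meaningfully compared with questions embedded by the new one. The sketch below is a hypothetical illustration of that rule, not Peaka's code; the `AISettings` class, its field names, and the model names are all made up to mirror the settings form.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class AISettings:
    """Hypothetical mirror of the settings form; field names are illustrative."""
    chat_model: str
    embedding_model: str
    response_language: str
    generate_semantics_on_update: bool


def changed_fields(old: AISettings, new: AISettings) -> list[str]:
    """Names of the settings whose values differ between two configurations."""
    return [f.name for f in fields(AISettings) if getattr(old, f.name) != getattr(new, f.name)]


def needs_reindex(old: AISettings, new: AISettings) -> bool:
    """Stored metadata vectors are tied to the embedding model that produced them,
    so only a change to that model requires re-embedding (reindexing)."""
    return "embedding_model" in changed_fields(old, new)


if __name__ == "__main__":
    current = AISettings("chat-model-a", "embedding-model-v1", "English", False)
    print(needs_reindex(current, replace(current, chat_model="chat-model-b")))  # False
    print(needs_reindex(current, replace(current, embedding_model="embedding-model-v2")))  # True
```

Swapping the Agent/Chat model, by contrast, leaves the stored vectors usable, which is why only the embedding setting carries the warning.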

Monitoring Token Usage of Models

Peaka allows you to monitor the usage of your AI models, including the tokens consumed during operations. Follow these steps to open the monitoring page:

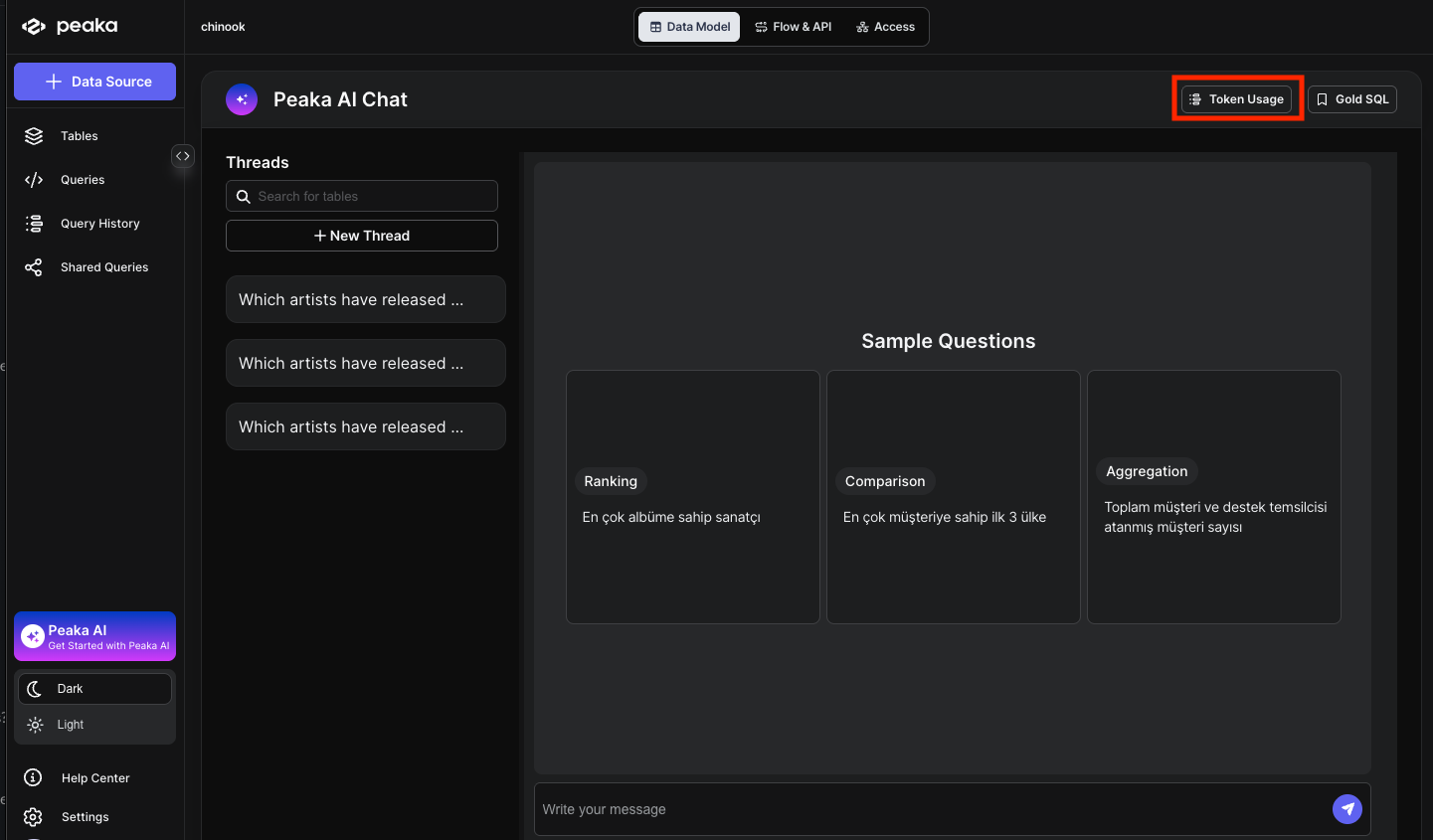

Navigate to the AI Page

- Go to Peaka AI in the main navigation.


Open the Token Usage Table

- Click the Token Usage button.

- The LLM Monitoring Table will open, displaying detailed usage information.


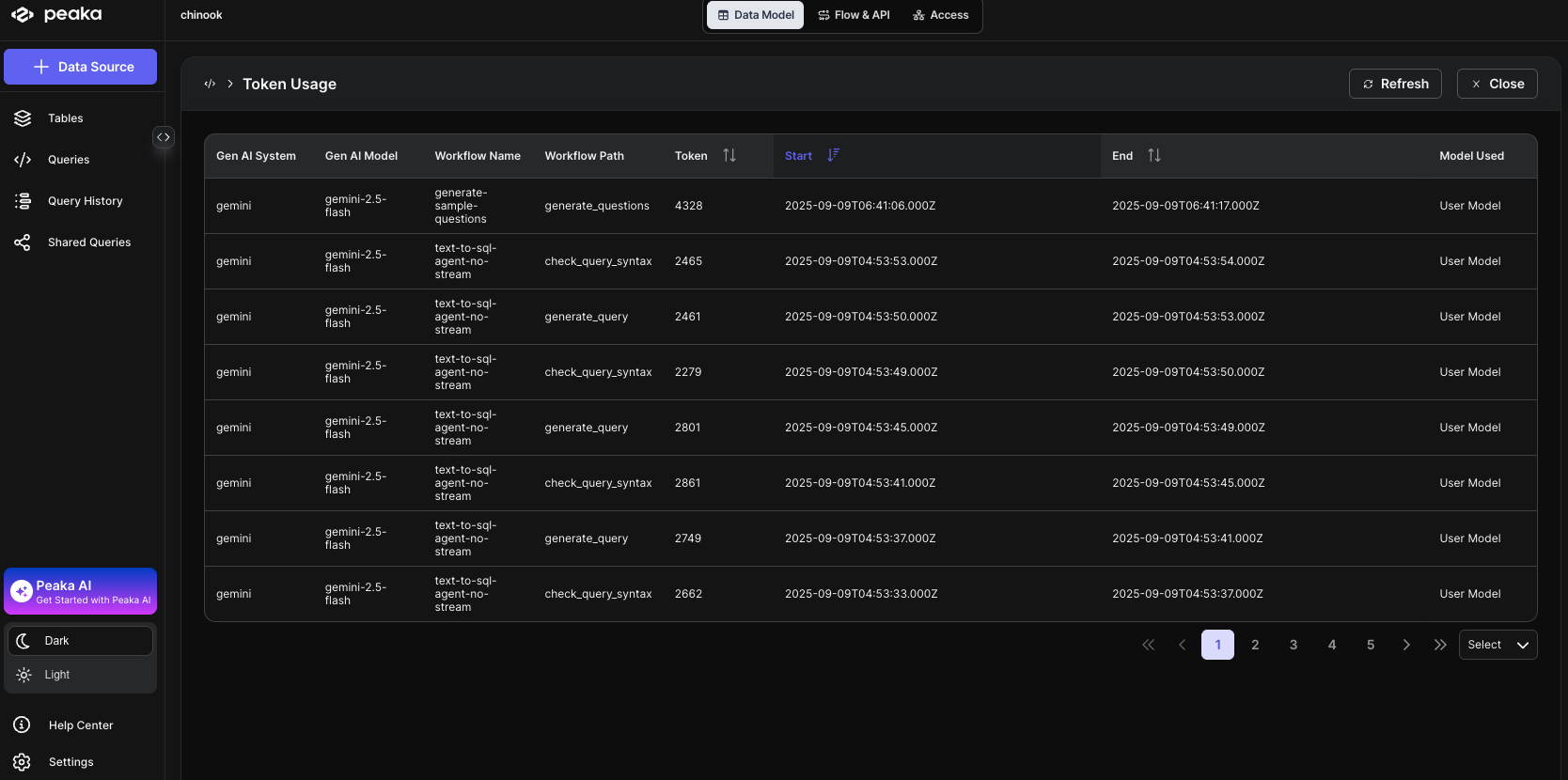

Understanding the Table Fields

| Field | Description |
|---|---|
| Gen AI System | The AI system used (e.g., OpenAI, Google Gemini). |
| Gen AI Model | The specific model used for the operation. |
| Workflow Name | The main AI workflow associated with the operation. |
| Workflow Path | The path of the subtask within the AI workflow. |
| Token | Total number of tokens consumed by Peaka for the operation. |
| Start Time | Timestamp when the operation started. |
| End Time | Timestamp when the operation ended. |
| Model Used | Indicates whether the User Model or Peaka Model was used. |
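If you copy rows from the LLM Monitoring Table for your own analysis, a few lines of Python are enough to total tokens per model and to compare usage of your own models with Peaka's. This sketch assumes each row has been turned into a dictionary keyed by the column names above, with ISO 8601 timestamps; the sample rows, model names, and values are made up, and no particular Peaka export format is implied.

```python
from collections import defaultdict
from datetime import datetime

# Illustrative rows shaped like the table above; all values are made up.
ROWS = [
    {"Gen AI System": "OpenAI", "Gen AI Model": "model-a", "Token": 1200,
     "Start Time": "2024-05-01T10:00:00", "End Time": "2024-05-01T10:00:03", "Model Used": "User Model"},
    {"Gen AI System": "OpenAI", "Gen AI Model": "model-a", "Token": 800,
     "Start Time": "2024-05-01T10:05:00", "End Time": "2024-05-01T10:05:02", "Model Used": "User Model"},
    {"Gen AI System": "Google Gemini", "Gen AI Model": "model-b", "Token": 500,
     "Start Time": "2024-05-01T11:00:00", "End Time": "2024-05-01T11:00:01", "Model Used": "Peaka Model"},
]


def tokens_by(rows: list[dict], column: str) -> dict[str, int]:
    """Sum the Token column, grouped by the values of another column."""
    totals: dict[str, int] = defaultdict(int)
    for row in rows:
        totals[row[column]] += row["Token"]
    return dict(totals)


def duration_seconds(row: dict) -> float:
    """Elapsed time of one operation, from its Start Time and End Time."""
    start = datetime.fromisoformat(row["Start Time"])
    end = datetime.fromisoformat(row["End Time"])
    return (end - start).total_seconds()


if __name__ == "__main__":
    print(tokens_by(ROWS, "Gen AI Model"))  # {'model-a': 2000, 'model-b': 500}
    print(tokens_by(ROWS, "Model Used"))  # {'User Model': 2000, 'Peaka Model': 500}
    print([duration_seconds(r) for r in ROWS])  # [3.0, 2.0, 1.0]
```

Grouping by `Model Used` is a quick way to see how much of your token consumption runs on your own provider account versus Peaka's models.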